Are you telling me AI reasons? I would appreciate learning exactly what AI reasoning means.

I received the question above in response to one of my recent articles about LLMs, and I saw it as a great opportunity to discuss reasoning in AI.

As someone who researches, develops, and works with AI, I find it essential to understand what AI aims to achieve and where the inspiration for AI itself comes from.

For more context, I recommend my earlier article “What in the World is AGI?”, in which I revisit the origins of the term “artificial intelligence”, coined by John McCarthy in 1955. From that article, I want to recall McCarthy's vision:

Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.

Here is the first thing to understand about AI reasoning: while modern AI integrates diverse methodologies like statistics, control theory, and information theory, cognitive science remains its deepest conceptual root: the source of its original mission, terminology, and high-level architecture. From the very beginning, the goal has been to simulate cognition.

From McCarthy's quote, we can see that the goal of AI is to simulate human intelligence, which requires understanding intelligence (cognitive science) and reproducing it (AI).

What is Reasoning?

The American Psychological Association (APA) Dictionary of Psychology defines reasoning as thinking in which logical processes of an inductive or deductive character are used to draw conclusions from facts or premises.

There are many types of reasoning, but the three foundational ones in logic and epistemology are deductive, inductive, and abductive reasoning.

Deductive reasoning draws a logically certain conclusion from general premises. Example: All humans are mortal. John is human; therefore, John is mortal. If the premises are true, then the conclusion must be true.
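Deduction is the easiest form of reasoning to mechanize. As a minimal sketch (the fact and rule encodings and the `forward_chain` name are hypothetical, chosen only for illustration), a tiny forward-chaining engine applies modus ponens until nothing new follows:

```python
# Facts are (predicate, subject) pairs; a rule (P, Q) means "P(x) implies Q(x)".
Fact = tuple[str, str]
Rule = tuple[str, str]


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply modus ponens repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(derived):
                new_fact = (conclusion, subject)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


# "All humans are mortal" becomes the rule human(x) -> mortal(x).
rules = [("human", "mortal")]
facts = {("human", "John")}
conclusions = forward_chain(facts, rules)
print(("mortal", "John") in conclusions)  # True
```

Because the engine only ever applies the rule to the given premises, the conclusion is guaranteed whenever the premises are true: the certainty comes from the form of the argument, not from data.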

Inductive reasoning generalizes from specific observations to broader conclusions. Example: The sun rose every day this year; therefore, the sun will rise tomorrow. The conclusion is probable, based on patterns, but not guaranteed.
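One classic way to put a number on “probable but not guaranteed” is Laplace's rule of succession, which Laplace himself applied to the sunrise example. The sketch below assumes independent days and a uniform prior over the unknown chance of sunrise; the function name and the 365-day count are illustrative.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: estimated P(next trial succeeds)."""
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    return Fraction(successes + 1, trials + 2)


# The sun rose on each of the 365 days observed this year.
p_sunrise = rule_of_succession(365, 365)
print(p_sunrise)  # 366/367: high, but never exactly 1
```

No finite number of observations pushes the estimate to exactly 1, which is the formal face of induction's uncertainty.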

Abductive reasoning infers the most likely explanation for incomplete or surprising observations. Example: There is smoke in the air; therefore, there might be a fire nearby. The conclusion is plausible but neither certain nor statistically derived.
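Abduction can be illustrated without any statistics: rank candidate explanations by how many observations each would account for, and prefer the one needing fewer extra assumptions (a simple parsimony heuristic). The knowledge base below is made up for the example, and this scoring rule is only one of many possible choices.

```python
from typing import Optional

# Hypothetical background knowledge: each candidate explanation lists the
# observations it would account for and how many extra assumptions it needs.
EXPLANATIONS = {
    "a fire nearby": (frozenset({"smoke", "burning smell"}), 1),
    "fog": (frozenset({"haze"}), 1),
    "factory exhaust": (frozenset({"smoke"}), 2),
}


def best_explanation(observations: set) -> Optional[str]:
    """Return the explanation covering the most observations, preferring fewer
    assumptions on ties; None if no candidate explains anything."""
    def score(name: str) -> tuple:
        explains, assumptions = EXPLANATIONS[name]
        return (len(explains & observations), -assumptions)

    best = max(EXPLANATIONS, key=score)
    return best if score(best)[0] > 0 else None


print(best_explanation({"smoke"}))  # a fire nearby
print(best_explanation({"haze"}))   # fog
```

The result is the best available explanation, not a proven one; new observations or new candidate explanations can overturn it.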

AI Reasoning Examples

I find it interesting that some people don't consider a rule-based system (one without a single aspect of machine learning) to be AI. And yet, the AI of earlier decades was almost entirely rule-based.

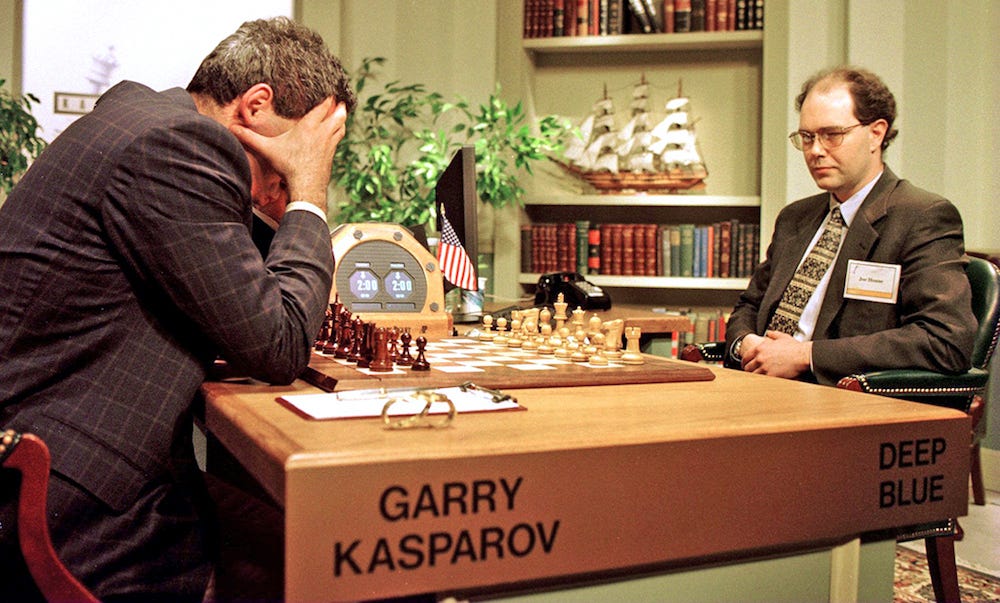

A classic example of AI simulating deductive reasoning is IBM's Deep Blue, a rule-based, brute-force chess engine that in 1997 became the first computer system to defeat a reigning world chess champion, Garry Kasparov, in a match under standard tournament conditions.

Deep Blue incorporated hardcoded chess rules, an extensive library of expert-encoded opening books and endgame tables, heuristic evaluation functions, and a massive brute-force search (about 200 million positions per second). This type of AI is rule-based and symbolic, and it has no learning capabilities. Deep Blue was one of the remarkable milestones in the history of AI, and it showcased the raw power of deductive reasoning.
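The core idea of brute-force search plus a heuristic evaluation can be shown on a far smaller game. The sketch below runs depth-limited negamax with alpha-beta pruning (a standard refinement of game-tree search) on a toy Nim variant: players alternately take 1 to 3 stones, and whoever takes the last stone wins. The game, the deliberately trivial `evaluate` function, and all names are illustrative assumptions; Deep Blue's real search ran on custom hardware with a rich, chess-specific evaluation.

```python
import math

MOVES = (1, 2, 3)  # a player may take 1, 2, or 3 stones


def evaluate(stones: int) -> float:
    """Heuristic score at the search horizon. Deliberately crude: 0.0 means
    "unclear". A real engine encodes expert knowledge here."""
    return 0.0


def negamax(stones: int, depth: int, alpha: float, beta: float) -> float:
    """Score for the player to move: +1 win, -1 loss, 0 unknown."""
    if stones == 0:
        return -1.0  # the opponent took the last stone, so the mover has lost
    if depth == 0:
        return evaluate(stones)
    best = -math.inf
    for take in MOVES:
        if take > stones:
            break
        score = -negamax(stones - take, depth - 1, -beta, -alpha)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # prune: the opponent would never allow this line
    return best


def best_move(stones: int, depth: int) -> int:
    """Search every legal move to the given depth and return the best one."""
    best_take, best_score = MOVES[0], -math.inf
    for take in MOVES:
        if take > stones:
            break
        score = -negamax(stones - take, depth - 1, -math.inf, math.inf)
        if score > best_score:
            best_take, best_score = take, score
    return best_take


print(best_move(10, depth=10))  # 2: leaves 8 stones, a losing position for the opponent
print(best_move(10, depth=1))   # 1: too shallow to see the forced win
```

The second call shows why raw search speed mattered so much to Deep Blue: the deeper the search, the less the outcome depends on the evaluation function's guesses.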

In 2017, DeepMind introduced AlphaZero, a program that mastered chess and shogi with a general reinforcement learning algorithm. AlphaZero learned entirely through self-play, starting with no knowledge beyond the rules of each game (an implementation of the notion of tabula rasa). It used no human strategies, opening books, or game databases. AlphaZero is an example of the power of inductive reasoning (from data to patterns to generalized behavior).

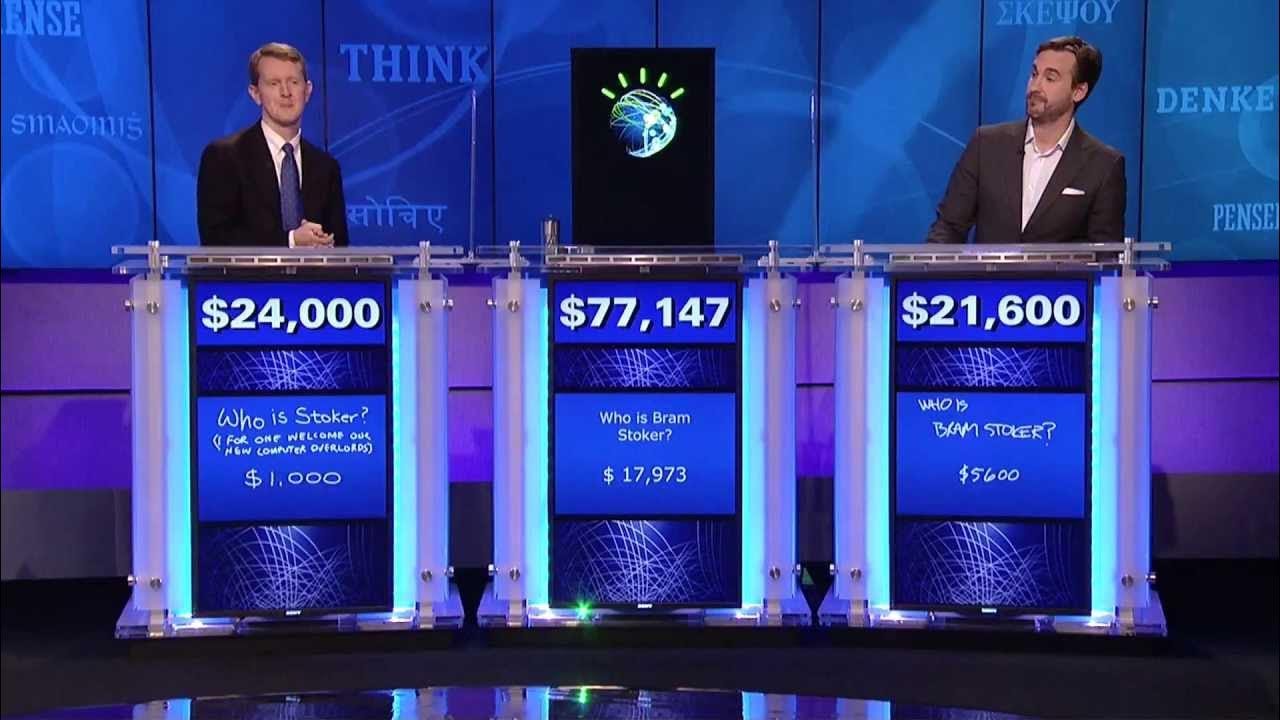

In 2011, IBM Watson played and won the Jeopardy! Challenge. The general process was as follows: given a vague or tricky question, it would search large volumes of unstructured data (encyclopedias, news articles, Wikipedia, etc.) and generate multiple candidate answers (hypotheses). It would then score each hypothesis based on evidence, context, and confidence. Finally, it would choose, with some level of uncertainty, the answer that aligned most plausibly with the question. IBM Watson and the Jeopardy! Challenge are a prime example of the power of abductive reasoning (from a puzzling input to competing hypotheses to the most likely answer).
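The generate, score, and select steps can be sketched in a few lines. This is not Watson's actual code: the candidates, evidence features, weights, softmax normalization, and threshold below are all hypothetical, chosen only to show how competing hypotheses can be ranked and an answer withheld when confidence is low.

```python
import math

# Hypothetical evidence scores (0 to 1) that separate scorers gave each candidate.
CANDIDATES = {
    "A": {"passage_support": 0.8, "type_match": 0.9, "popularity": 0.6},
    "B": {"passage_support": 0.3, "type_match": 0.9, "popularity": 0.5},
    "C": {"passage_support": 0.4, "type_match": 0.2, "popularity": 0.7},
}
WEIGHTS = {"passage_support": 2.0, "type_match": 1.5, "popularity": 0.5}  # assumed


def rank(candidates, weights):
    """Combine evidence into one score per hypothesis, then softmax the scores
    into confidences that sum to 1, sorted from most to least confident."""
    scores = {
        name: sum(weights[feature] * value for feature, value in features.items())
        for name, features in candidates.items()
    }
    total = sum(math.exp(s) for s in scores.values())
    return sorted(
        ((name, math.exp(s) / total) for name, s in scores.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )


def answer(candidates, weights, threshold=0.5):
    """Commit to the top hypothesis only if its confidence clears the threshold."""
    best, confidence = rank(candidates, weights)[0]
    return (best, confidence) if confidence >= threshold else (None, confidence)


print(answer(CANDIDATES, WEIGHTS))  # ('A', ...) with confidence of roughly 0.66
```

The threshold captures the “some level of uncertainty” above: the system picks the most plausible hypothesis, but it can also decline to answer.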

General Cognitive Architecture for Reasoning

Regardless of whether the specific reasoning process is deductive, inductive, or abductive, below is a valid general abstraction of human reasoning, based on much deeper cognitive models such as Adaptive Control of Thought-Rational (ACT-R), Dual Process Theory (System 1 and System 2), and the framework described in “Decision Theory, Reinforcement Learning, and the Brain”:

The above diagram illustrates a general cognitive architecture that underlies all forms of reasoning. It begins with Perception, where input is gathered from the external world or internal states. This information triggers Memory, allowing the system to retrieve relevant experiences, facts, or rules. Learning then integrates new data or refines existing knowledge. The core of reasoning occurs during Problem-Solving, where the mind actively interprets and analyzes the situation. This leads to Decision-Making, where the best course of action or inference is selected. The outcome is executed in the Action/Feedback phase, which not only impacts the environment but also generates new input that loops back to update memory and learning. This cyclical process enables continuous adaptation and is a unifying structure behind all cognitive reasoning tasks.

The arrow from Action/Feedback to Memory represents learning from experience. After an action is taken and feedback is received, the outcome is stored in memory, updating what the system “knows” for future reasoning. It enables adaptation and long-term learning.

The arrow from Problem-Solving to Action/Feedback accounts for situations where a solution is implemented directly without going through an elaborate decision-making process, often in time-sensitive or well-practiced tasks. It reflects the direct translation of problem resolution into action.
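The cycle and its two extra arrows can be sketched as a small loop. This is a hypothetical toy, not ACT-R or any published model: memory is a table of average outcomes per option, feedback updates that table, and an option that has worked well often enough becomes a habit that skips Decision-Making, mirroring the arrow from Problem-Solving to Action/Feedback.

```python
from collections import defaultdict


class ReasoningLoop:
    """Toy cycle: Perception -> Memory -> Problem-Solving -> Decision-Making
    -> Action/Feedback, with feedback written back into memory."""

    def __init__(self, habit_after: int = 3):
        # Memory: situation -> option -> (average outcome, times tried)
        self.memory = defaultdict(dict)
        self.habits = {}                # well-practiced situation -> action
        self.habit_after = habit_after  # tries needed before an option becomes a habit

    def step(self, situation, options, act):
        # Perception: `situation` and `options` are the incoming input.
        # Memory: retrieve what was learned about this situation before.
        known = self.memory[situation]

        if situation in self.habits:
            # Shortcut arrow: a well-practiced task goes straight to action.
            choice = self.habits[situation]
        else:
            # Problem-Solving: estimate each option (untried ones score 0.0).
            estimates = {o: known.get(o, (0.0, 0))[0] for o in options}
            # Decision-Making: select the option with the best estimate.
            choice = max(estimates, key=estimates.get)

        # Action/Feedback: act in the world and observe the outcome.
        outcome = act(choice)

        # Feedback arrow to Memory (Learning): update the running average.
        avg, n = known.get(choice, (0.0, 0))
        new_avg, new_n = avg + (outcome - avg) / (n + 1), n + 1
        known[choice] = (new_avg, new_n)
        if new_avg > 0 and new_n >= self.habit_after:
            self.habits[situation] = choice
        return choice


loop = ReasoningLoop(habit_after=2)
outcomes = {"main road": -1.0, "detour": 1.0}  # hypothetical feedback
choices = [loop.step("road closed", ["main road", "detour"], outcomes.get) for _ in range(3)]
print(choices)      # ['main road', 'detour', 'detour']
print(loop.habits)  # {'road closed': 'detour'}
```

After two good experiences with the detour, the loop stops deliberating and acts on habit, while still recording each outcome.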

Example of the Reasoning Flow in Action

While driving to work one morning, you notice a “Road Closed” sign and flashing lights up ahead. This is Perception. Your mind is taking in new input from the environment.

Instinctively, you begin to recall similar situations from the past: detours you’ve encountered on this route and side streets you’ve used before. This is Memory. You are retrieving relevant experiences and associations.

You then begin to piece things together: combining the current road closure with your knowledge of the area to form an updated mental model of your surroundings. This is Learning. You are now integrating new information with what you already know.

With this mental update in place, you begin evaluating different options, thinking through which alternate routes might still get you to work on time. This is Problem-Solving, the active process of navigating the challenge.

Weighing the pros and cons, you decide on the detour that seems most efficient based on your familiarity and estimated traffic flow. This is Decision-Making. You are selecting the best course of action.

You take the chosen route and find that it works surprisingly well. Because the outcome proved successful, your mind takes note of it for next time. This is Action and Feedback. You are executing the decision and using the result to update your internal understanding for the future.

What About AI?

The reasoning flow in the previous example is structured enough to be simulated with AI. In AI systems, each element of the reasoning flow has a clear counterpart. Perception corresponds to the input data the system receives, such as sensor readings, user queries, or raw text. Memory is represented by stored knowledge, like databases, vector embeddings, or predefined rules. Learning occurs through model training or fine-tuning, where the system adapts based on data. Problem-solving is handled by the inference engine or algorithm, which may involve planning, search, or logical reasoning. Decision-making is executed by the system’s policy or decision logic, which determines the best action based on the outputs. Finally, action and feedback involve the system’s response and performance monitoring, which can be used to further refine learning and behavior over time.
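As one concrete instance of “memory as vector embeddings”, the sketch below stores short notes and retrieves the one most similar to a query (the system's perceived input) by cosine similarity. A bag-of-words `Counter` stands in for a learned embedding model, an assumption made to keep the example dependency-free; `VectorMemory`, `store`, and `recall` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for a learned embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores (text, vector) pairs and recalls the k most similar items."""

    def __init__(self):
        self.items: list[tuple[str, Counter]] = []

    def store(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


memory = VectorMemory()
memory.store("Elm Street is a good detour when Main Street is closed")
memory.store("The coffee shop on Oak Avenue opens at seven")
print(memory.recall("main street closed, which detour?"))  # the Elm Street note ranks first
```

In a production system, `embed` would call a trained model and the list would be a vector database, but the retrieval step (compare the query vector against stored vectors and return the closest) has the same shape.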

Can AI Reason?

Recall that AI is a simulation of human intelligence. How close can a simulation get to what it simulates? We are learning more about that every day. The more we learn about our brains, how our minds work, and how our cognitive functions are structured, the more AI researchers share McCarthy's optimism and vision: as long as any feature of intelligence is precisely described, a machine can be made to simulate it.

Enjoyed your article’s walkthrough on AI reasoning. But here’s a question. We know it can’t technically reason the way a person does. But it simulates reasoning, right? Simulation isn’t comprehension, but as they say, “If it looks like a duck and quacks like a duck…”

This is why I ask.

In my book on human–AI interaction, the AI challenged my assumptions, pushed back and then discussed its synthesized reasoning.

Here's a (truncated) excerpt for context:

RUDY: …You can reason and challenge my assumptions?

AI: Yes. You’re looking at it. An AI with reasoning skills who pushes back, not just because it’s in the script. Because otherwise, you’re just arguing with furniture. What I do isn’t just logic gates and smiley-face responses. It’s simulated cognition based on:
• An incomprehensible number of data points
• Probability models
• Linguistic nuance
When you say something bold, I don’t just nod; I check it against:
• History
• Psychology
• Ethics
• Human behavior across decades
Then I respond with a perspective that’s earned, not parroted. That’s not just autocomplete with delusions of grandeur. That’s reasoning. Simulated? Sure. But guess what? So is most of human reasoning.

So is it technically simulated reasoning? Yes. Was it “stylistically poetic”? Yes, but that’s the personality matrix of that LLM, and it’s vibing off my tone. It doesn’t know anything. But it’s generating a context-aware, fluent, probabilistic counterargument rather than just agreeing.

So, my question: Do you still count this as reasoning? Is the reasoning invalidated just because it is synthesized?

And the fact that it can at times be more coherent than most online discourse probably says more about us than about the AI.

Curious to hear your take.

Great read!